Machine Learning Models Explained: How They Work

- January 1, 2026

Machine learning models often work in the background, quietly influencing everything from product recommendations to financial decisions. They analyze data with the calm confidence of systems that never forget a pattern.

As organizations handle rising volumes of information, these models evolve from optional tools into dependable decision partners. They reveal trends, reduce repetitive work, and spot patterns that humans often miss, all while keeping operations consistent.

In this guide, you will get a clear breakdown of the models, their key components, major categories, strengths, and real-world uses, giving you a practical understanding instead of another buzzword-filled overview.

Contents

- 1 What Are Machine Learning Models?

- 2 Key Components That Define a Machine Learning Model

- 3 Empower your business with smarter machine learning models today!

- 4 Classification of Machine Learning Models

- 5 Applications of Machine Learning Models

- 6 Strengths and Limitations of Major Machine Learning Model Families

- 7 What Webisoft Brings to Your Machine Learning Model Strategy

- 8 Conclusion

- 9 Frequently Asked Questions

What Are Machine Learning Models?

A machine learning model is a computational program that identifies patterns in data and uses them to make decisions or predictions on new, unseen information.

It represents a mathematical function learned from examples rather than being explicitly coded for every scenario. These models encapsulate relationships between inputs and outputs, enabling systems to generalize beyond the data they observed.

They are core tools in modern artificial intelligence for tasks such as classification, forecasting, and recognition. Unlike traditional software, machine learning models evolve with data and help automate complex decision-making in many domains.

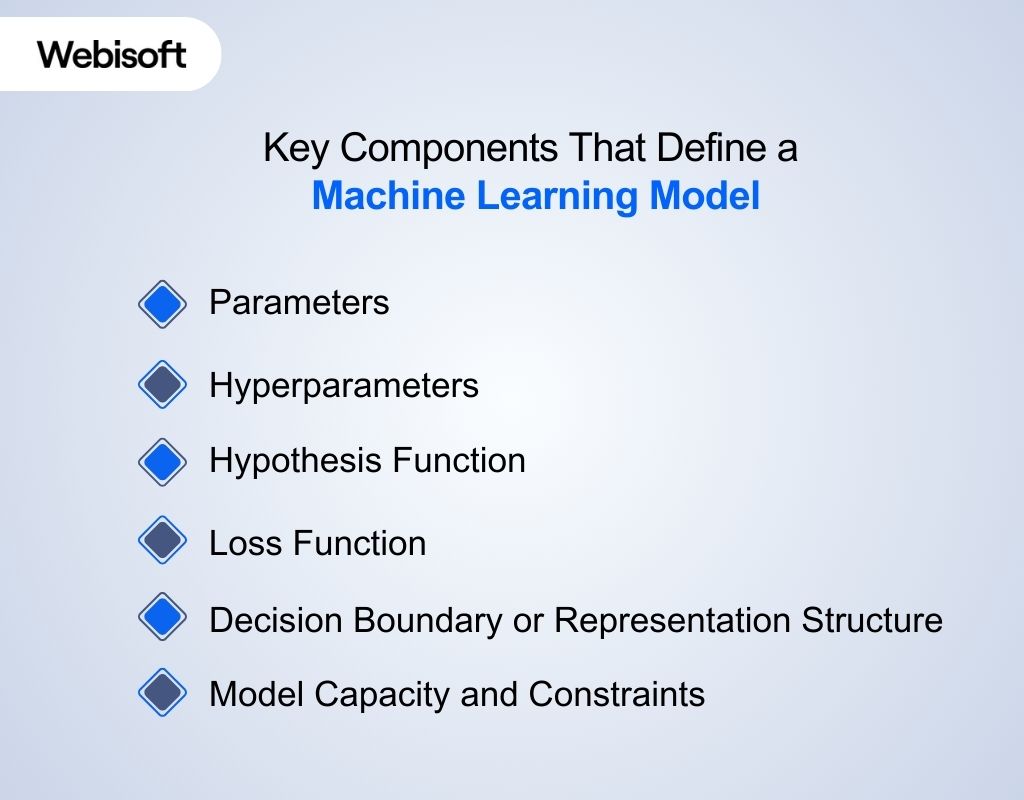

Key Components That Define a Machine Learning Model

Machine learning models share structural elements that shape how they represent information and make predictions. These components define the model’s behavior, flexibility, and capacity to capture patterns in data.

Parameters

Values that determine the model’s internal structure. They define how inputs are transformed into outputs, as seen in linear weights, neural network connections, or probability distributions.

Hyperparameters

External configuration choices that control the model’s form or complexity, such as depth, regularization strength, or kernel type. These influence model capacity but are not learned from data.

Hypothesis Function

The mathematical form a model uses to map inputs to outputs. The full set of functions the model can express within its predefined structure is called its hypothesis space.

Loss Function

A formal measure of how far the model’s predictions deviate from expected values. Training adjusts the parameters to reduce this value, so the loss defines what a "good" fit means for the model.

Decision Boundary or Representation Structure

The geometric or structural pattern the model forms to separate or organize data, such as linear boundaries, tree partitions, or neural feature hierarchies.

Model Capacity and Constraints

The range of patterns a model can capture is limited by its structural choices. Higher-capacity models can represent more complex relationships, but they are also more prone to overfitting when data is limited.
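To make these components concrete, here is a minimal sketch in plain Python. The toy data, the learning rate, and the epoch count are assumptions chosen for illustration, not recommendations. It fits a straight line with gradient descent so that each component maps to a named piece of code.

```python
# Toy data generated from y = 2x + 1 (an assumption made for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

# Hyperparameters: chosen by us, not learned from data.
learning_rate = 0.05
epochs = 2000

# Parameters: learned from data, starting from arbitrary values.
w, b = 0.0, 0.0


def hypothesis(x, w, b):
    """Hypothesis function: a straight line mapping an input to an output."""
    return w * x + b


def mse_loss(w, b):
    """Loss function: mean squared error over the training examples."""
    return sum((hypothesis(x, w, b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


initial_loss = mse_loss(w, b)
for _ in range(epochs):
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (hypothesis(x, w, b) - y) * x for x, y in zip(xs, ys)) / len(xs)
    grad_b = sum(2 * (hypothesis(x, w, b) - y) for x, y in zip(xs, ys)) / len(xs)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
final_loss = mse_loss(w, b)

print(f"w={w:.3f}, b={b:.3f}, loss {initial_loss:.3f} -> {final_loss:.6f}")
```

On this toy data the learned parameters settle close to w = 2 and b = 1. A straight line is a low-capacity hypothesis: it fits this data well only because the data is itself linear.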

Empower your business with smarter machine learning models today!

Work with Webisoft to build dependable, scalable, and high-value ML solutions!

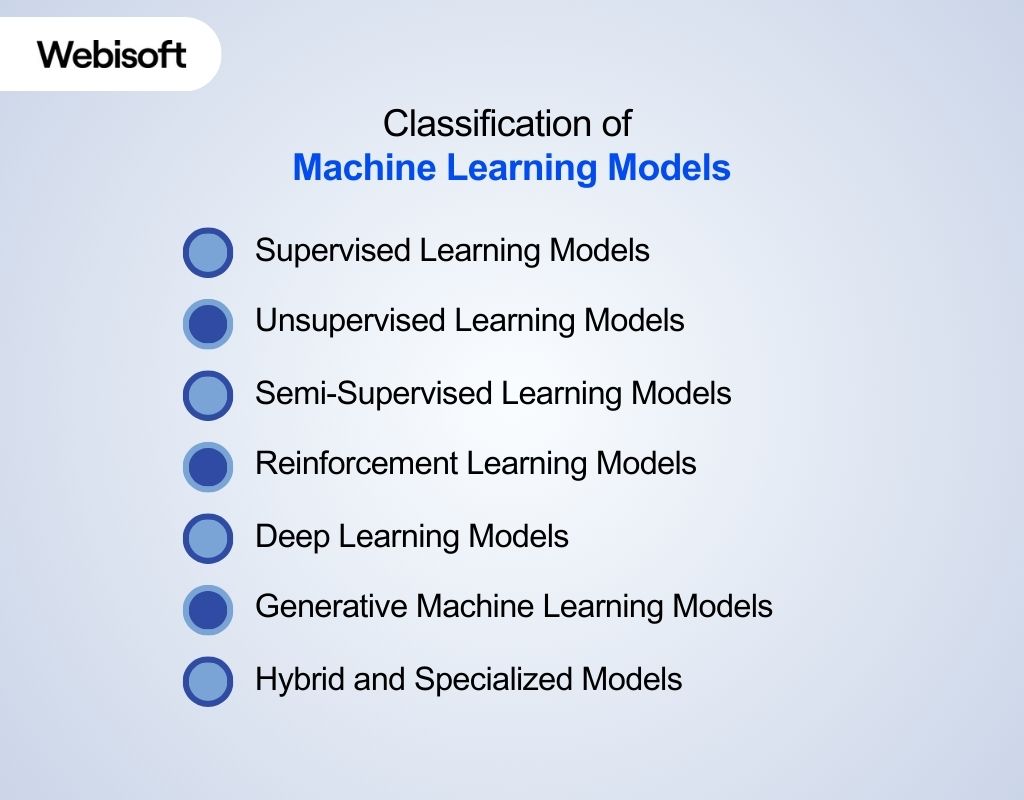

Classification of Machine Learning Models

Machine learning models are grouped into categories based on how they use data, the assumptions guiding their structure, and the representations they form to capture patterns. This becomes clearer when examining common examples of machine learning models across these categories.

1. Supervised Learning Models

Supervised models learn from labeled examples where every input is paired with a correct output. These models operate within a defined hypothesis space and aim to approximate an unknown target function.

Many teams refine these systems with help from a machine learning development company to ensure accuracy and scalability.

1.1 Regression Models

Regression models estimate continuous quantities such as prices, probabilities, or trends. They rely on structural assumptions about relationships between variables. Examples:

- Linear Regression: Assumes linear relationships and offers interpretability.

- Polynomial Regression: Models curved patterns by extending input features.

- Ridge and Lasso Regression: Add constraints that help prevent overfitting, making them suitable for noisy or high-dimensional data.
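The effect of the Ridge and Lasso constraints can be seen in a one-feature sketch. This assumes centered toy data and a model with no intercept, where both penalties have simple closed forms. The data and the penalty strengths (`lam`) are illustrative assumptions.

```python
# Centered toy data, roughly y = 2x with a little noise (assumed for illustration).
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-4.1, -1.9, 0.1, 2.0, 3.9]

s_xy = sum(x * y for x, y in zip(xs, ys))
s_xx = sum(x * x for x in xs)


def ols_slope():
    """Ordinary least squares: minimizes sum((y - w*x)^2)."""
    return s_xy / s_xx


def ridge_slope(lam):
    """Ridge: minimizes sum((y - w*x)^2) + lam * w^2, which shrinks w toward 0."""
    return s_xy / (s_xx + lam)


def lasso_slope(lam):
    """Lasso: minimizes sum((y - w*x)^2) + lam * |w| via soft-thresholding."""
    shrunk = max(abs(s_xy) - lam / 2.0, 0.0)
    return (shrunk if s_xy >= 0 else -shrunk) / s_xx


print(ols_slope(), ridge_slope(10.0), lasso_slope(10.0), lasso_slope(50.0))
```

With these numbers the unpenalized slope is about 1.99, Ridge with `lam=10` shrinks it to about 0.995, and Lasso with `lam=50` sets it to exactly 0. That is the behavior that lets Lasso drop uninformative features entirely.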

1.2 Classification Models

Classification models assign inputs to categories by learning decision boundaries. They differ widely in representation: geometric margins, probabilistic boundaries, or instance comparisons. Examples:

- Logistic Regression: Produces class probabilities via a sigmoid function.

- Support Vector Machines: Find the separating hyperplane with the largest margin between classes.

- Decision Trees and Random Forests: Learn hierarchical partitioning of input space.

- Gradient Boosting Models: Combine many weak learners into a strong predictive model.

These models perform best when historical labeled data is abundant and the goal is prediction.
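As a small illustration of a learned decision boundary, the following sketch trains a one-feature logistic regression with plain gradient descent. The study-hours data, learning rate, and iteration count are assumptions made for the example. The feature is centered first, which keeps the optimization well behaved.

```python
import math


def sigmoid(z):
    """Squash any real number into the (0, 1) range."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical data: hours studied and whether the student passed.
hours = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
passed = [0, 0, 0, 1, 1, 1]

mean_hours = sum(hours) / len(hours)
xs = [h - mean_hours for h in hours]  # centered feature

w, b = 0.0, 0.0
learning_rate = 0.5
for _ in range(1000):
    preds = [sigmoid(w * x + b) for x in xs]
    # Gradients of the average log loss with respect to w and b.
    grad_w = sum((p - y) * x for p, y, x in zip(preds, passed, xs)) / len(xs)
    grad_b = sum(p - y for p, y in zip(preds, passed)) / len(xs)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b


def predict_proba(h):
    """Estimated probability of passing after h hours."""
    return sigmoid(w * (h - mean_hours) + b)


def predict(h):
    """Class label: 1 if the probability reaches 0.5, else 0."""
    return 1 if predict_proba(h) >= 0.5 else 0


print([round(predict_proba(h), 3) for h in hours])
```

The decision boundary is the input where the probability crosses 0.5. For this symmetric toy data it lands between 3 and 4 hours.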

2. Unsupervised Learning Models

Unsupervised models analyze unlabeled data to uncover latent structures. They are valuable when patterns exist in the data but no labeled outcomes are available.

2.1 Clustering Models

Clustering models group samples by similarity, revealing natural structure in complex datasets. Examples:

- K-Means: Partitions data into K compact clusters.

- DBSCAN: Identifies clusters of different shapes using density-based rules.

- Hierarchical Clustering: Constructs nested clusters for multi-level insights.
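The K-Means idea can be sketched in a few lines of plain Python. The points and the starting centroids below are assumptions picked so the result is easy to check by hand. Production implementations add smarter initialization and convergence checks.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points and recomputing centroids."""
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: each centroid moves to the mean of its cluster.
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.0), (8.0, 8.0), (9.0, 9.0), (8.0, 10.0)]
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(centroids)
print(clusters)
```

Here the first three points end up in one cluster and the last three in the other, with K = 2 fixed in advance. Choosing K is itself a hyperparameter decision.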

2.2 Dimensionality Reduction Models

These models compress high-dimensional data into smaller, informative representations. Examples:

- PCA: Projects data onto orthogonal directions that capture maximum variance.

- t-SNE / UMAP: Capture non-linear structure for visualization.
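For intuition on PCA, here is a rough sketch that finds the first principal component of a tiny 2-D dataset using power iteration on the covariance matrix. The data points and the iteration count are assumptions. Real libraries typically use a singular value decomposition instead.

```python
import math

# Toy 2-D points lying close to a diagonal line (assumed for illustration).
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8)]
n = len(points)
mean_x = sum(x for x, _ in points) / n
mean_y = sum(y for _, y in points) / n
centered = [(x - mean_x, y - mean_y) for x, y in points]

# Entries of the 2x2 sample covariance matrix.
cxx = sum(x * x for x, _ in centered) / (n - 1)
cyy = sum(y * y for _, y in centered) / (n - 1)
cxy = sum(x * y for x, y in centered) / (n - 1)

# Power iteration: repeatedly multiply by the covariance matrix and normalize.
vx, vy = 1.0, 0.0
for _ in range(50):
    nx, ny = cxx * vx + cxy * vy, cxy * vx + cyy * vy
    norm = math.hypot(nx, ny)
    vx, vy = nx / norm, ny / norm

# Variance captured along the component, as a share of total variance.
variance_along = vx * (cxx * vx + cxy * vy) + vy * (cxy * vx + cyy * vy)
explained_ratio = variance_along / (cxx + cyy)

# Each 2-D point compressed to a single coordinate along the component.
projected = [x * vx + y * vy for x, y in centered]
print((vx, vy), explained_ratio)
```

For this data a single direction captures more than 99% of the variance, so each 2-D point can be replaced by one number with little loss.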

2.3 Density and Anomaly Models

These models characterize distributions to detect rare or unusual patterns. Examples:

- Gaussian Mixture Models: Represent data as a mixture of Gaussians.

- Isolation Forest: Isolates anomalies using random partitioning.

- One-Class SVM: Learns a boundary around normal data.

Unsupervised models help with segmentation, noise reduction, and exploratory analysis.
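The simplest density-based detector is a single Gaussian fitted to normal data. It is a much simpler cousin of the models listed above, not a substitute for them. The sketch below flags values that sit many standard deviations from the mean. The sensor readings and the threshold of 3 are assumptions.

```python
import statistics

# Hypothetical sensor readings collected under normal conditions.
normal_readings = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0]
mean = statistics.mean(normal_readings)
std = statistics.stdev(normal_readings)


def z_score(value):
    """Distance from the mean, measured in standard deviations."""
    return abs(value - mean) / std


def is_anomaly(value, threshold=3.0):
    """Flag values that are unusually far from the normal range."""
    return z_score(value) > threshold


print(is_anomaly(10.1), is_anomaly(15.0))
```

A reading of 10.1 is well within the normal spread, while 15.0 is flagged. The approach breaks down when normal data has several modes, which is where mixture models or Isolation Forest become useful.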

3. Semi-Supervised Learning Models

Semi-supervised models operate between labeled and unlabeled settings. They rely on assumptions like cluster consistency or smoothness, meaning similar points should receive similar labels. Common approaches:

- Self-Training Models: Use a model’s confident predictions to expand the labeled dataset.

- Co-Training Models: Use two different feature sets or perspectives to refine labels.

- Graph-Based Models: Propagate label information across connected samples.

These models are particularly useful when labeled data is costly but unlabeled data is abundant. When additional data preparation is needed, organizations often turn to broader AI and ML development support that includes workflows for handling labeled and unlabeled datasets.
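Self-training can be sketched with a deliberately simple base model. The example below uses a one-dimensional nearest-centroid classifier, and the confidence measure (the gap between the two centroid distances), the threshold, and the data are all assumptions made for illustration.

```python
def fit_centroids(labeled):
    """Nearest-centroid classifier: one mean value per class."""
    centroids = {}
    for label in {y for _, y in labeled}:
        values = [x for x, y in labeled if y == label]
        centroids[label] = sum(values) / len(values)
    return centroids


def predict_with_confidence(centroids, x):
    """Return (label, confidence), where confidence is the distance gap."""
    ranked = sorted(centroids, key=lambda label: abs(x - centroids[label]))
    best, runner_up = ranked[0], ranked[1]
    confidence = abs(x - centroids[runner_up]) - abs(x - centroids[best])
    return best, confidence


def self_train(labeled, unlabeled, threshold=3.0, max_rounds=5):
    """Repeatedly add confident pseudo-labels to the labeled set."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(labeled)
        confident = []
        for x in unlabeled:
            label, confidence = predict_with_confidence(centroids, x)
            if confidence >= threshold:
                confident.append((x, label))
        if not confident:
            break  # nothing left that the model is sure about
        labeled.extend(confident)
        accepted = {x for x, _ in confident}
        unlabeled = [x for x in unlabeled if x not in accepted]
    return fit_centroids(labeled), labeled, unlabeled


seed_labels = [(1.0, 0), (9.0, 1)]
pool = [1.5, 2.0, 8.0, 8.5, 5.2]
centroids, labeled, remaining = self_train(seed_labels, pool)
print(centroids, remaining)
```

Four of the five unlabeled points receive pseudo-labels, while the ambiguous point at 5.2 stays unlabeled. The threshold controls the trade-off between using more data and reinforcing the model's own mistakes.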

4. Reinforcement Learning Models

Reinforcement learning models learn through interaction rather than static datasets. They estimate long-term rewards and optimal actions, making them suitable for sequential decision problems.

4.1 Value-Based Models

Predict how good a state or action is. Examples:

- Q-Learning: Builds a table of expected cumulative rewards (Q-values) for each state-action pair.

- Deep Q Networks (DQN): Use neural networks for large state spaces.
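A tabular Q-learning loop fits in a short sketch. The five-state corridor environment, the reward of 1 at the goal, the purely random exploration, and the values of `ALPHA` and `GAMMA` are all assumptions chosen to keep the example small.

```python
import random

N_STATES = 5          # states 0..4 on a line; state 4 is the goal
ACTIONS = (0, 1)      # 0 = move left, 1 = move right
ALPHA, GAMMA = 0.5, 0.9


def step(state, action):
    """Deterministic toy environment: reward 1 only on reaching the goal."""
    next_state = max(state - 1, 0) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


rng = random.Random(0)
q_table = [[0.0, 0.0] for _ in range(N_STATES)]

for _ in range(500):  # episodes
    state, done = 0, False
    while not done:
        action = rng.choice(ACTIONS)  # purely random exploration
        next_state, reward, done = step(state, action)
        # Q-learning target: immediate reward plus discounted best future value.
        best_next = 0.0 if done else max(q_table[next_state])
        target = reward + GAMMA * best_next
        q_table[state][action] += ALPHA * (target - q_table[state][action])
        state = next_state

# Greedy policy: the highest-valued action in each non-terminal state.
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
print([round(max(row), 3) for row in q_table])
```

Although the agent explores at random, the table converges toward values where moving right is preferred in every state. The values decay by the discount factor with each step away from the goal.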

4.2 Policy-Based Models

Learn a direct mapping from states to actions without estimating value functions. Examples:

- Policy Gradient Models: Adjust parameters to increase expected reward.

4.3 Actor-Critic Models

Combine both value estimation and policy learning for stability and efficiency. These models power robotics, control systems, and game-playing agents.

5. Deep Learning Models

Deep learning models use multi-layer neural architectures that learn increasingly meaningful representations from data.

Additional learning materials are available through DeepLearning.AI resources, which help explain how these architectures evolve across different domains.

These models capture complex patterns by transforming inputs through several stages of abstraction, making them effective for tasks involving images, language, audio, and high-dimensional signals.

5.1 Feedforward Networks

Feedforward networks move information from input to output without loops. They learn general relationships in structured or tabular data and form the foundation for many deeper neural architectures.
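A forward pass through a tiny feedforward network can be written directly. In this sketch the weights are hand-picked rather than trained, an assumption made so the result can be checked by hand. They make a two-layer network compute XOR, which no single linear layer can represent.

```python
def relu(z):
    """Rectified linear unit: pass positives, zero out negatives."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sums followed by an activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked weights (assumed for illustration, not learned from data).
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x1, x2):
    """Information flows input -> hidden layer -> output, with no loops."""
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    output = dense(hidden, OUTPUT_W, OUTPUT_B, lambda z: z)
    return output[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, forward(a, b))
```

The outputs are 0, 1, 1, 0 for the four input pairs. In practice these weights would be learned by minimizing a loss, but the layer-by-layer computation is the same.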

5.2 Convolutional Neural Networks (CNNs)

CNNs apply sliding filters to detect visual features such as edges, textures, and shapes. As layers stack, they recognize increasingly complex patterns, making them highly effective for image classification, object detection, and vision tasks.
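The sliding-filter operation itself is simple to sketch. The image and the hand-written vertical-edge filter below are assumptions. In a trained CNN the filter values are learned rather than specified.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A tiny image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, exactly where the dark-to-bright edge sits. Flat regions produce 0.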

5.3 Recurrent Networks (RNN, LSTM, GRU)

Recurrent networks maintain memory of previous inputs, allowing them to process sequences like text, sensor readings, or audio. LSTM and GRU variants handle longer-range dependencies more effectively by controlling how information flows over time.

5.4 Transformers

Transformers use attention mechanisms to analyze all parts of a sequence at once. This enables fast, accurate modeling of long-range relationships, making them the leading architecture for natural language processing and many multimodal tasks.
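The core attention computation can be sketched without any framework. This is scaled dot-product attention in plain Python. For brevity the same toy vectors serve as queries, keys, and values, whereas real Transformers first pass inputs through learned projections. The vectors are assumptions.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Each query attends to every key at once and blends the values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        blended = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors used as queries, keys, and values alike.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how strongly one token attends to every other token. Because all pairs are scored in the same step, distant positions are as accessible as neighboring ones.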

5.5 Autoencoders

Autoencoders learn compact representations by encoding data into a smaller form and reconstructing it. They help with anomaly detection, noise reduction, and feature extraction, especially in unsupervised learning settings.

5.6 Generative Adversarial Networks (GANs)

GANs pair a generator that creates synthetic data with a discriminator that evaluates its realism. Through this competition, GANs produce highly realistic images, audio, and other synthetic outputs used in simulation and creative workflows.

6. Generative Machine Learning Models

Generative models learn to approximate data distributions rather than predict labels. They produce new samples that resemble real-world data. Key families include:

- Variational Autoencoders: Learn probabilistic latent spaces.

- GANs: Generate high-fidelity synthetic data via generator–discriminator competition.

- Diffusion Models: Create images and signals by iteratively reversing noise.

- Autoregressive Models: Model sequential dependencies (e.g., text, audio).

Generative models support simulation, content generation, augmentation, and creative applications.

7. Hybrid and Specialized Models

Some widely used model families span multiple categories:

- Ensemble Models: Combine predictions of multiple learners to increase stability.

- Graph Neural Networks: Operate on graph-structured data.

- Probabilistic Graphical Models: Encode conditional dependencies formally.

These models extend classical boundaries and support emerging AI applications. They often appear in broader lists of machine learning models, which highlight how specialized approaches complement traditional model families.
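The ensemble idea mentioned above can be illustrated with majority voting. The three threshold rules stand in for trained models and are assumptions made for the example.

```python
from collections import Counter


def majority_vote(models, x):
    """Return the label predicted by most of the models."""
    votes = [model(x) for model in models]
    return Counter(votes).most_common(1)[0][0]


# Three hypothetical weak classifiers that disagree near the boundary.
models = [
    lambda x: int(x > 4),
    lambda x: int(x > 5),
    lambda x: int(x > 6),
]

print(majority_vote(models, 4.5), majority_vote(models, 5.5))  # 0 1
```

Individual models disagree on inputs between 4 and 6, yet the combined vote behaves like a single, steadier rule. Random Forests and boosting build on the same principle with more sophisticated weighting.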

If you want guidance choosing or implementing the right machine learning model, Webisoft can help. Connect with our team to discuss your project and explore how customized solutions can support your technical and business goals.

Applications of Machine Learning Models

Machine learning models support many tasks by analyzing data and generating meaningful outputs. These capabilities often become part of broader AI-powered automation solutions that improve operational processes.

Each model family fits specific scenarios, and different types of machine learning models address different patterns in the data.

1. Supervised Learning Models

Supervised models learn from labeled examples and produce predictions or classifications. Applications:

- Fraud detection systems that flag suspicious transactions

- Medical diagnostic tools that identify high-risk cases

- Assigning customers to predefined segments for targeted marketing

- Spam and threat detection in communication platforms

- Quality inspection through labeled defect patterns

2. Regression Models

Regression models predict continuous numerical values and estimate trends. Applications:

- Forecasting sales, demand, and resource usage

- Risk scoring in finance and insurance

- Price estimation for retail, travel, and logistics

- Energy consumption prediction for planning systems

- Performance prediction in industrial monitoring

3. Classification Models

Classification models assign inputs to predefined categories. Applications:

- Email filtering and document categorization

- Disease classification from medical indicators

- Image classification in digital asset workflows

- Customer churn prediction systems

- Security threat classification in access control

4. Unsupervised Learning Models

Unsupervised models uncover hidden patterns in unlabeled datasets. Applications:

- Market segmentation based on behavioral similarity

- Anomaly detection in network, sensor, or financial data

- Grouping customers by purchasing patterns

- Data simplification for exploration and visualization

- Detecting structural clusters in scientific datasets

5. Dimensionality Reduction Models

These models compress high-dimensional data into compact forms. Applications:

- Visualization of complex datasets in research

- Noise reduction in signals and images

- Preprocessing for efficient model training

- Feature extraction for downstream tasks

- Pattern discovery in large scientific systems

6. Deep Learning Models

Deep models use neural architectures to capture complex patterns. Applications:

- Image recognition, defect detection, and object tracking

- Speech recognition and audio classification

- Text interpretation in support, search, and moderation

- Autonomous driving perception tasks

- Medical imaging analysis for anomaly detection

7. Generative Models

Generative models create new data samples that resemble real ones. Applications:

- Producing synthetic training data when real data is limited

- Image, audio, and design generation for creative workflows

- Simulations for testing robotics or control algorithms

- Data augmentation in vision and language pipelines

- Style transfer and media enhancement

8. Reinforcement Learning Models

Reinforcement learning models learn strategies through interaction and rewards. Applications:

- Robotics navigation and manipulation tasks

- Autonomous vehicle decision-making

- Real-time optimization in manufacturing and logistics

- Adaptive control in energy and environmental systems

- Game-playing agents for simulation and research

Strengths and Limitations of Major Machine Learning Model Families

Different machine learning models offer distinct advantages based on how they represent information and the assumptions they rely on.

Each type of machine learning model also carries limitations that affect how well it performs under specific data conditions.

1. Linear Models

Strengths:

- Easy to interpret due to straightforward relationships between inputs and outputs.

- Train quickly and work well with high-dimensional data when patterns are simple.

- Require fewer computational resources and handle real-time use cases effectively.

Limitations:

- Struggle with non-linear or complex relationships.

- Sensitive to outliers without proper preprocessing.

2. Tree-Based Models

Strengths:

- Capture non-linear patterns through hierarchical splits.

- Work with mixed data types and require minimal feature engineering.

- Ensemble versions like Random Forest and Gradient Boosting offer strong accuracy and stability.

Limitations:

- Single trees overfit easily and may produce unstable results.

- Ensemble models can become large and harder to interpret.

- Performance may degrade on very high-dimensional sparse datasets.

3. Instance-Based Models (e.g., KNN)

Strengths:

- Simple design with no explicit training phase.

- Adapt well to local structure and flexible decision boundaries.

- Useful when decision rules must closely follow observed examples.

Limitations:

- Slow predictions on large datasets due to distance calculations.

- Sensitive to noise and irrelevant features.

- Require careful scaling and distance metric selection.
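The scaling point is easy to demonstrate. In the sketch below the customer data, with age in years and income in dollars, is an assumption. The same 1-nearest-neighbor rule gives different answers before and after min-max scaling because income dominates the raw distance.

```python
import math

# Hypothetical training examples: (age, income) -> label.
train = [((25.0, 50000.0), "A"), ((60.0, 51000.0), "B")]
query = (27.0, 50700.0)


def nearest_label(examples, point):
    """1-nearest-neighbor: label of the closest training example."""
    return min(examples, key=lambda example: math.dist(example[0], point))[1]


def fit_min_max(points):
    """Record the per-feature minimum and maximum of the training data."""
    columns = list(zip(*points))
    return [min(c) for c in columns], [max(c) for c in columns]


def scale(point, lows, highs):
    """Map each feature to the 0..1 range seen in training."""
    return tuple((v - lo) / (hi - lo) for v, lo, hi in zip(point, lows, highs))


lows, highs = fit_min_max([features for features, _ in train])
scaled_train = [(scale(features, lows, highs), label) for features, label in train]

raw_prediction = nearest_label(train, query)
scaled_prediction = nearest_label(scaled_train, scale(query, lows, highs))
print(raw_prediction, scaled_prediction)  # B A
```

On raw values the query looks closest to "B" because a 300-dollar income gap outweighs a 33-year age gap. After scaling, the age similarity counts and the prediction flips to "A".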

4. Probabilistic Models

Strengths:

- Provide uncertainty estimates that support risk-aware decision-making.

- Work well when data fits known distributions or independence assumptions.

- Efficient training with small datasets.

Limitations:

- Assumptions are often violated in real-world settings.

- Struggle with complex, high-dimensional patterns.

- May oversimplify relationships due to rigid distributions.

5. Deep Learning Models

Strengths:

- Learn complex, layered representations that capture patterns traditional models miss.

- Handle images, audio, text, and other unstructured data with exceptional performance.

- Scale effectively with large datasets and modern hardware.

Limitations:

- Require significant data and computational resources.

- Hard to interpret due to layered, non-linear structures.

- Sensitive to hyperparameter choices and prone to overfitting without proper controls.

6. Generative Models

Strengths:

- Produce realistic synthetic data and creative outputs.

- Model full data distributions rather than only predicting labels.

- Support tasks such as simulation, augmentation, and content generation.

Limitations:

- Difficult to train and tune due to unstable optimization dynamics.

- Require large, diverse datasets for high-quality outputs.

- Risk producing unrealistic or biased samples if training data is limited.

7. Reinforcement Learning Models

Strengths:

- Learn sequential decision strategies through interaction.

- Adapt to changing environments by optimizing long-term reward.

- Effective in robotics, control systems, and autonomous decision-making.

Limitations:

- Require many interactions to learn stable policies.

- Sensitive to reward design and environment variability.

- Challenging to deploy in real-world systems with unpredictable conditions.

What Webisoft Brings to Your Machine Learning Model Strategy

Machine learning models reach their full potential only when aligned with real business needs. Effective use of machine learning models and algorithms requires thoughtful design and refinement, and this is where Webisoft helps transform models into dependable, scalable intelligence systems.

Builds End-to-End Machine Learning Solutions

Webisoft supports the entire lifecycle: strategy, data readiness, custom model development, integration, and refinement. This creates a complete path from concept to deployment.

Delivers Custom Machine Learning Models That Fit Your Business

Instead of generic templates, Webisoft builds customized ML models that reflect the data, workflows, and industry constraints of your organization. This ensures that the model’s behavior matches your operational goals.

Integrates Models Into Your Existing Systems

Our team connects ML models directly to tools you already use, including ERP, CRM, automation systems, and analytics pipelines. This makes insights accessible and actionable within daily operations.

Supports Scalable, Production-Ready AI Infrastructure

Webisoft helps deploy machine learning models on infrastructure built for reliability, monitoring, and performance. This includes ongoing model evaluation and retraining pipelines that keep your systems accurate as data evolves.

Turns Machine Learning Into Real Business Impact

The focus is not just on technical delivery but on measurable outcomes: improved decisions, better forecasting, automated processes, and operational efficiency. Webisoft positions machine learning as a growth driver rather than an isolated tool.

Conclusion

Reaching this point means you now hold a clearer view of how machine learning models fit together, where each one excels, and how they support practical decision-making. The confusion that often surrounds this topic starts to fade once the moving parts fall into place.

And if you want these insights to evolve into real solutions, the journey is much smoother with the right partner. Webisoft helps teams move from understanding to implementation, shaping systems that run reliably, scale with your needs, and deliver long-term value.

Frequently Asked Questions

Do all machine learning models require feature engineering?

No. Deep learning architectures learn useful representations directly from raw data, and many tree-based methods work well with little manual feature preparation. Traditional models such as linear models still depend on manually crafted features, making performance highly sensitive to preprocessing quality and domain-specific feature construction.

Can machine learning models explain their decisions?

Some models are inherently interpretable and provide clear decision logic, such as linear models and decision trees. Others, including deep learning and ensemble methods, require additional tools or techniques to understand their internal reasoning and extract meaningful explanations for predictions.

How stable are machine learning models over time?

Model stability depends on how consistent the underlying data remains. Shifts in patterns, user behavior, or external factors can degrade performance.

Regular evaluation, monitoring, and selective retraining help maintain accuracy in environments where conditions frequently change.
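A monitoring check of this kind can be as simple as comparing recent accuracy with a baseline. The sketch below is one possible approach. The function names, the baseline value, the tolerance of 0.05, and the sample predictions are hypothetical.

```python
def accuracy(predictions, actuals):
    """Share of predictions that match the observed outcomes."""
    return sum(p == a for p, a in zip(predictions, actuals)) / len(actuals)


def needs_retraining(baseline_accuracy, recent_accuracy, tolerance=0.05):
    """Flag the model when accuracy drops by more than the tolerance."""
    return baseline_accuracy - recent_accuracy > tolerance


baseline = 0.90  # accuracy measured at deployment time (assumed)

# Hypothetical recent predictions compared with what actually happened.
recent_predictions = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
recent_actuals = [1, 0, 0, 1, 0, 0, 0, 1, 1, 1]

recent = accuracy(recent_predictions, recent_actuals)
print(recent, needs_retraining(baseline, recent))  # 0.7 True
```

Here recent accuracy has fallen from 0.90 to 0.70, so the check recommends retraining. Real monitoring usually also tracks shifts in the input data, since true outcomes often arrive with a delay.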